Decision Tree

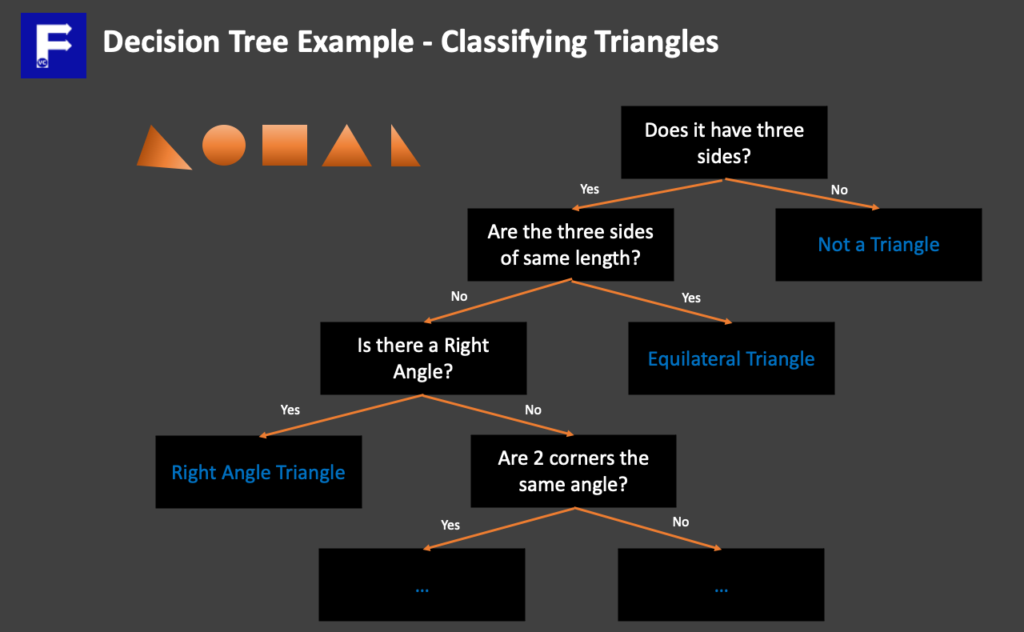

While a Decision Tree may sound like a fancy term, it is something most people are probably already familiar with. Basically, we classify an object by asking a series of questions about its attributes, and the next question we ask depends on the answer to the previous one. As an illustration, imagine a primary school student who has been taught to classify different triangles. The first question would be whether the shape has three sides. Next, you may ask whether all three sides are the same length. Finally, you may ask whether there is a right angle. By asking these questions in sequence, you eventually conclude whether the shape is a triangle and, if so, what type of triangle it is.
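The triangle example above is already a small decision tree. As a rough sketch, it can be written as nested `if` statements, where each `if` is one question and each `return` is a leaf. The function name, its parameters, and the leaf names such as "other triangle" are made up for illustration.

```python
def classify_shape(sides: int, all_sides_equal: bool, has_right_angle: bool) -> str:
    """Hypothetical hand-built decision tree for the triangle example."""
    # Question 1: does the shape have three sides?
    if sides != 3:
        return "not a triangle"
    # Question 2 (only asked for three-sided shapes): are all sides the same length?
    if all_sides_equal:
        return "equilateral triangle"
    # Question 3: is there a right angle?
    if has_right_angle:
        return "right triangle"
    return "other triangle"


print(classify_shape(3, False, True))
```

Each answer decides which question comes next, which is exactly the structure a decision tree learner builds automatically from data.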

Applying Decision Trees to classify cars

Now we want to apply the same concept to classifying our data. However, the questions we can ask depend on the features in our dataset. That is to say, a question is only meaningful if we can answer it with the data we already have. With such questions, we can then progressively split our data as we build our decision tree. To illustrate how to build a decision tree, we once again attempt to classify our cars dataset into Convertibles, SUVs, or Sedans. (In our previous article, we looked at applying K-means clustering to classify cars.) You will need to install scikit-learn if you want to follow along with our example.

import pandas as pd

from sklearn import tree

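# Import our data (the filename below is a placeholder; point it at your own training file)

carlist = pd.read_csv("CarList.csv")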

carlabel = carlist['Label'].copy()  # Create our label/known classifications

carlist.drop(["Car","Label"],axis=1, inplace=True)

carlist["Open Top"] = carlist['Open Top'].map({"Yes":1,"No":0}) # Create our dataset

carlist.head()
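To see what the preparation steps above do without the original file, here is a sketch on a tiny made-up table. The column names (`Doors`, `Height`) and all values are assumptions chosen only to mirror the article's steps: keep the labels aside, drop the columns that are not features, and encode "Open Top" as 1/0 because scikit-learn's trees expect numeric features.

```python
import pandas as pd

# Made-up rows standing in for the real car list; names and values are hypothetical
carlist = pd.DataFrame({
    "Car": ["Car A", "Car B", "Car C", "Car D"],
    "Doors": [2, 4, 5, 4],
    "Open Top": ["Yes", "No", "No", "No"],
    "Height": [1280, 1450, 1700, 1440],
    "Label": ["Convertible", "Sedan", "SUV", "Sedan"],
})

# Keep the known classes aside: these are the answers the model will learn from
carlabel = carlist["Label"].copy()

# Features only: the car's name and its label should not be used to split the data
features = carlist.drop(columns=["Car", "Label"])

# Encode the Yes/No column as numbers
features["Open Top"] = features["Open Top"].map({"Yes": 1, "No": 0})

print(features)
```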

Building our Decision Tree model

Now that we have our data in the form of a pandas DataFrame, we next need to create a decision tree model using scikit-learn.

# We declare a tree model with max_leaf_nodes being the number of categories we have

cartree = tree.DecisionTreeClassifier(max_leaf_nodes=3)

In effect, the above statement creates a decision tree with at most 3 leaf nodes. Because each leaf predicts a single class, our model can sort our data into at most three classes. By the same token, should we want to classify more types of cars, we would increase this value. At this point, the next step is to feed our data into our model and let scikit-learn build our decision tree. Unlike K-means clustering, we not only need to feed our data, but also need to tell our model the answers. Hence this is commonly referred to as a form of Supervised Learning.
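A quick way to check what `max_leaf_nodes` does is to fit the same made-up data with different limits and count the leaves with `get_n_leaves()`. The three-feature layout (doors, open top, height) and every value below are hypothetical; the point is only that the labels `y` are passed to `fit` (supervised learning) and that the leaf limit caps how many classes the tree can ever predict.

```python
from sklearn.tree import DecisionTreeClassifier

# Hypothetical features per car: [doors, open_top, height]
X = [
    [2, 1, 1280], [2, 1, 1300],
    [4, 0, 1450], [4, 0, 1440],
    [5, 0, 1700], [5, 0, 1750],
]
# Known answers; supplying these is what makes the learning "supervised"
y = ["Convertible", "Convertible", "Sedan", "Sedan", "SUV", "SUV"]

model = DecisionTreeClassifier(max_leaf_nodes=3, random_state=0).fit(X, y)
print(model.get_n_leaves())   # number of leaves actually grown
print(model.classes_)         # class labels, sorted

# With only two leaves, at most two distinct classes can come out of predict()
small = DecisionTreeClassifier(max_leaf_nodes=2, random_state=0).fit(X, y)
print(small.get_n_leaves(), set(small.predict(X)))
```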

# Fit our data to the model

cartree = cartree.fit(carlist, carlabel)

# Examine the trained model

tree.plot_tree(cartree)

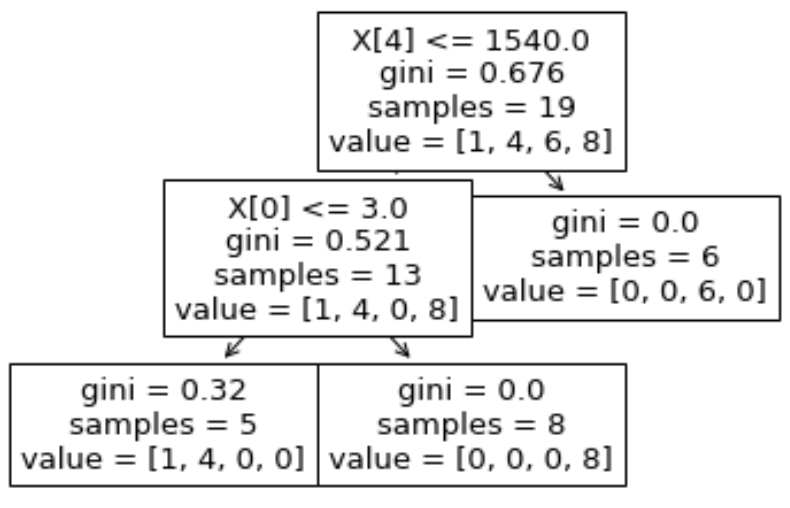

[Text(200.88000000000002, 181.2, 'X[4] <= 1540.0\ngini = 0.676\nsamples = 19\nvalue = [1, 4, 6, 8]'),

Text(133.92000000000002, 108.72, 'X[0] <= 3.0\ngini = 0.521\nsamples = 13\nvalue = [1, 4, 0, 8]'),

Text(66.96000000000001, 36.23999999999998, 'gini = 0.32\nsamples = 5\nvalue = [1, 4, 0, 0]'),

Text(200.88000000000002, 36.23999999999998, 'gini = 0.0\nsamples = 8\nvalue = [0, 0, 0, 8]'),

Text(267.84000000000003, 108.72, 'gini = 0.0\nsamples = 6\nvalue = [0, 0, 6, 0]')]
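The `gini` number in each node measures how mixed that node is: it is 1 minus the sum of the squared class proportions, so a node containing a single class scores 0. The sketch below recomputes the values printed above from each node's `value` list, and also the weighted impurity left after the first split, which is the quantity scikit-learn's default `criterion="gini"` tries to make as small as possible.

```python
def gini(counts):
    """Gini impurity of a node, given how many samples of each class it holds."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)


print(round(gini([1, 4, 6, 8]), 3))  # root node -> 0.676
print(round(gini([1, 4, 0, 8]), 3))  # left child -> 0.521
print(round(gini([1, 4, 0, 0]), 3))  # left-left leaf -> 0.32
print(round(gini([0, 0, 0, 8]), 3))  # left-right leaf -> 0.0
print(round(gini([0, 0, 6, 0]), 3))  # right leaf -> 0.0

# Impurity after the root split, weighted by how many cars went each way
left, right = [1, 4, 0, 8], [0, 0, 6, 0]
weighted = (sum(left) * gini(left) + sum(right) * gini(right)) / (sum(left) + sum(right))
print(round(weighted, 3))  # -> 0.356
```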

Understanding our Decision Tree

As can be seen, our decision tree model generates exactly three leaf nodes along with a lot of other information. However, what does this all mean? As I noted earlier, our decision tree analyses the data and asks itself a series of questions. Firstly, we start off with 19 cars in our list (samples = 19). When X[4] <= 1540 is True, we go left in our tree; in other words, cars with a height <= 1540 go left, and the rest go right. Since 6 cars went to the right node and it is a leaf node (i.e. there are no subsequent nodes), those 6 cars are all placed in a single class (value = [0, 0, 6, 0]). Along the left branch, our next question is whether X[0] <= 3.0 (the number of doors is <= 3). Finally, this question classifies our remaining 13 cars: 5 cars have 3 or fewer doors and 8 cars have more than 3. Note that each value list counts the cars in each class, with the classes in sorted order of their labels. The leftmost leaf (gini = 0.32) is not perfectly pure, because 1 of its 5 cars belongs to a different class from the other 4.
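Once trained, the tree is just a couple of comparisons. As a sketch, the three-leaf tree printed above can be copied by hand into a plain function. The function name is hypothetical, and the class names assigned to each leaf are an inference from the predictions later in this article (right leaf: SUV, few doors: Convertible, otherwise Sedan) rather than something the printed tree states directly.

```python
def classify_car(doors: float, height: float) -> str:
    """Hand-written copy of the learned tree (X[0] = doors, X[4] = height)."""
    if height <= 1540:          # root question
        if doors <= 3.0:        # second question, only on the left branch
            return "Convertible"
        return "Sedan"
    return "SUV"                # right leaf: the 6 tallest cars


print(classify_car(2, 1300))
```

Writing the tree out like this makes it clear why decision trees are considered easy to interpret: every prediction can be traced back to a short list of yes/no questions.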

Making Predictions with our Decision Tree

To check whether our decision tree is accurate, we want to test our model against data it has never seen before. To do so, we prepare another dataset containing new cars that were not used in training.
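Preparing a separate file is one way to get unseen data. Another common option, shown here only as a sketch on made-up numbers, is to hold back part of a single labelled dataset with scikit-learn's `train_test_split`. The feature layout and values are hypothetical; `stratify=y` keeps the mix of classes similar in both parts.

```python
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Hypothetical cars: [doors, open_top, height]
X = [
    [2, 1, 1280], [2, 1, 1300], [2, 1, 1310],
    [4, 0, 1450], [4, 0, 1440], [4, 0, 1460],
    [5, 0, 1700], [5, 0, 1750], [5, 0, 1720],
]
y = ["Convertible"] * 3 + ["Sedan"] * 3 + ["SUV"] * 3

# Hold back 30% of the cars; the model never sees them during fit()
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

model = DecisionTreeClassifier(max_leaf_nodes=3, random_state=0).fit(X_train, y_train)
score = model.score(X_test, y_test)  # fraction of held-back cars classified correctly
print(len(X_train), len(X_test), score)
```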

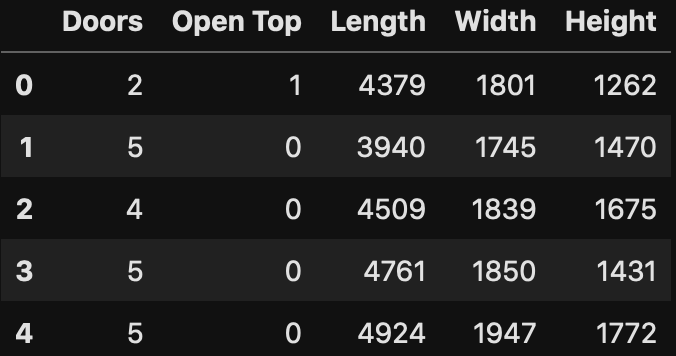

cartest= pd.read_csv("CarTest.csv").drop(["Car","Label"],axis=1) # Remove answers from dataset

cartest['Open Top']= cartest['Open Top'].map({"Yes":1,"No":0})

cartest.head()
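The test data must go through exactly the same preparation as the training data, with the columns in the same order, because the model reads features by position (and recent scikit-learn versions also check the column names recorded during `fit`). A small helper keeps the two in sync. `prepare_features` and the made-up tables below are hypothetical, not part of the original example.

```python
import pandas as pd


def prepare_features(df, columns=None):
    """Hypothetical helper: clean a car table the same way for training and testing.

    If `columns` is given (e.g. the training columns), the result is reordered
    to match, so every feature sits in the position the model was trained on.
    """
    # errors="ignore" lets the same helper handle files without a Label column
    features = df.drop(columns=["Car", "Label"], errors="ignore")
    features["Open Top"] = features["Open Top"].map({"Yes": 1, "No": 0})
    if columns is not None:
        features = features[list(columns)]
    return features


# Made-up tables; the test table lists its columns in a different order
train = pd.DataFrame({"Car": ["A", "B"], "Doors": [2, 4], "Open Top": ["Yes", "No"],
                      "Height": [1280, 1450], "Label": ["Convertible", "Sedan"]})
test = pd.DataFrame({"Height": [1700], "Open Top": ["No"], "Doors": [5], "Car": ["C"]})

train_X = prepare_features(train)
test_X = prepare_features(test, columns=train_X.columns)
print(test_X)
```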

Straightaway, we test our model by making predictions and evaluating the results.

# Get our predictions from test data the model has never seen before

cartree.predict(cartest)

array(['Convertible', 'Sedan', 'SUV', 'Sedan', 'SUV'], dtype=object)

# Recombine known answer with the predicted class

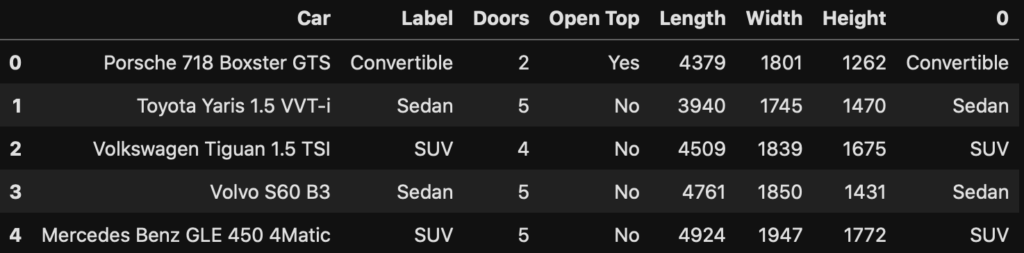

cartest_full = pd.concat([pd.read_csv("CarTest.csv"),pd.DataFrame(cartree.predict(cartest))], axis=1)

cartest_full

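As shown above, our test dataset has 5 cars of different known classes. If we compare the known labels with the predicted results (the column on the right), we see that our model predicted the car classes very accurately. Keep in mind, though, that five cars is a very small test set, so this is an encouraging sign rather than a reliable estimate of accuracy.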
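Rather than comparing the columns by eye, the check can be turned into a number. The sketch below puts known and predicted classes side by side, marks each row as correct or not, and uses `sklearn.metrics.accuracy_score` for the overall share. The helper name `compare_predictions` and the three example labels are hypothetical; with the article's objects it would be called as `compare_predictions(pd.read_csv("CarTest.csv")["Label"], cartree.predict(cartest))`.

```python
import pandas as pd
from sklearn.metrics import accuracy_score


def compare_predictions(known, predicted):
    """Hypothetical helper: tabulate known vs. predicted classes and compute accuracy."""
    table = pd.DataFrame({"Known": list(known), "Predicted": list(predicted)})
    table["Correct"] = table["Known"] == table["Predicted"]
    return table, accuracy_score(table["Known"], table["Predicted"])


# Made-up labels, only to show the shape of the output
table, acc = compare_predictions(
    ["Convertible", "Sedan", "SUV"],
    ["Convertible", "Sedan", "Sedan"],
)
print(table)
print(f"accuracy = {acc:.2f}")
```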

Summary

In summary, today we described the general concept of a Decision Tree. Additionally, we saw how easy it is to create such a model using scikit-learn. Above all, we also started to dip into supervised learning and training/test data splits, and for the first time predicted outcomes from data. We hope this has been useful and has helped you start using decision trees.