Getting familiar with data

In order to gain insights into your data, getting familiar with the data itself is critical. With a good understanding of our data, we can also decide on a strategy for further processing and analysis. But before getting ahead of ourselves, let’s look at some of the most common functions every Data Scientist should have under their belt.

Practical Example – Toronto Housing Data

To start off with, let’s assume I have a series of Toronto housing price data since 2015. I would like to understand what details it contains before looking for any trends. We read our data into a pandas DataFrame as shown below:

import pandas as pd

# Import our housing data into Pandas

HousingData = pd.read_csv("MLS.csv")
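If you do not have the MLS.csv file to hand, a minimal sketch like the one below lets you follow along. It assumes a tiny, made-up CSV held in memory (a few of the real column names, with invented values that are not real MLS data). pd.read_csv accepts any file-like object, so io.StringIO behaves just like a file path here, and empty fields are read in as NaN.

```python
import io

import pandas as pd

# Hypothetical stand-in for MLS.csv: a subset of the real column names with
# made-up values, for illustration only (not real MLS data).
csv_text = """Location,CompIndex,CompBenchmark,Date
Area A,100.0,500000,2015-01-01
Area B,,,2015-01-01
Area A,102.5,512500,2015-02-01
"""

# read_csv accepts any file-like object, so StringIO stands in for a file path.
# The empty fields in the "Area B" row become NaN.
HousingData = pd.read_csv(io.StringIO(csv_text))

print(HousingData)
```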

How many records do we have? – pandas.DataFrame.shape

In our example, the data is organised in a table, much like what you would expect from an Excel file or database. By reviewing the shape of our data, we can learn how many columns and how many records we have.

# Find the shape of our DataFrame

HousingData.shape

(4726, 17)

Evidently, our DataFrame has 4,726 rows and 17 columns.
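As a small illustration of shape, the sketch below uses a hypothetical three-row DataFrame with made-up values. Note that shape is an attribute rather than a method, so it is written without parentheses, and its (rows, columns) tuple can be unpacked directly.

```python
import pandas as pd

# Hypothetical toy DataFrame (made-up values) standing in for HousingData.
df = pd.DataFrame(
    {
        "Location": ["Area A", "Area B", "Area C"],
        "CompIndex": [100.0, 101.2, None],
        "Date": ["2015-01-01", "2015-01-01", "2015-01-01"],
    }
)

# shape is a (rows, columns) tuple, accessed without parentheses.
n_rows, n_cols = df.shape
print(f"{n_rows} rows, {n_cols} columns")  # 3 rows, 3 columns

# len() returns the row count on its own, the same as df.shape[0].
assert len(df) == df.shape[0]
```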

What are the column headers? – pandas.DataFrame.columns

Knowing that we have 17 columns, reviewing the column headers will give us more insight into our data. To do so, we can use the columns attribute:

HousingData.columns

Index(['Location', 'CompIndex', 'CompBenchmark', 'CompYoYChange',
       'SFDetachIndex', 'SFDetachBenchmark', 'SFDetachYoYChange',
       'SFAttachIndex', 'SFAttachBenchmark', 'SFAttachYoYChange',
       'THouseIndex', 'THouseBenchmark', 'THouseYoYChange', 'ApartIndex',
       'ApartBenchmark', 'ApartYoYChange', 'Date'],
      dtype='object')

Each of the 17 column headers describes what its column contains. Some are obvious, like “Date”, whilst others are abbreviated, like “THouseBenchmark”. Without further documentation, it may be impossible to guess what an abbreviation means. Fortunately, we know that THouse here stands for Town House, but it may not be so clear in all cases.
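Because the headers share prefixes such as “THouse” and “Apart”, one way to work with them is to group columns by name. The sketch below assumes an empty DataFrame that reuses some of the real column names; a list comprehension over df.columns and DataFrame.filter(like=...) are two standard ways to pick out all the town-house columns.

```python
import pandas as pd

# Hypothetical empty frame reusing a few of the real column names (no data).
df = pd.DataFrame(
    columns=[
        "Location", "CompIndex", "THouseIndex", "THouseBenchmark",
        "THouseYoYChange", "ApartIndex", "Date",
    ]
)

# columns is a pandas Index; tolist() converts it to a plain Python list.
all_cols = df.columns.tolist()

# Group columns sharing a prefix, e.g. everything about town houses.
thouse_cols = [c for c in df.columns if c.startswith("THouse")]
print(thouse_cols)  # ['THouseIndex', 'THouseBenchmark', 'THouseYoYChange']

# filter(like=...) keeps columns whose name contains the substring.
thouse_df = df.filter(like="THouse")
```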

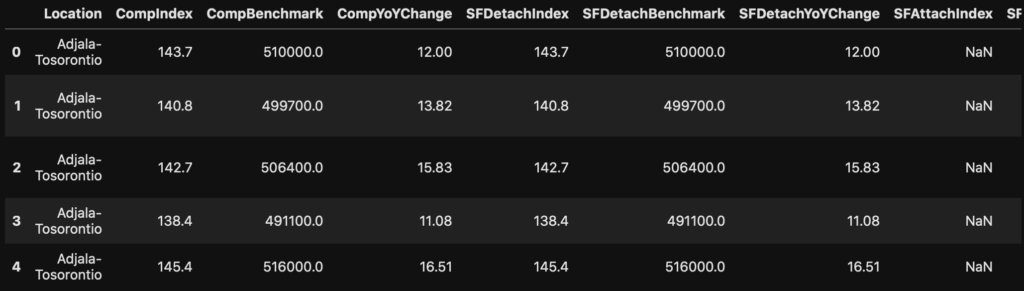

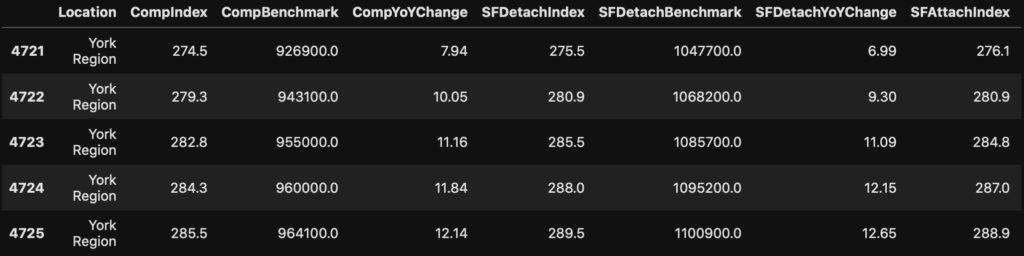

What does our data look like? – pandas.DataFrame.head / pandas.DataFrame.tail

Looking at the first or last few rows of our data also tells us what our data looks like. For instance, we learn how values are represented and which formats are used for numbers, dates, and so on.

HousingData.head()

HousingData.tail()
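head and tail both default to five rows and take an optional n argument. The sketch below assumes a hypothetical ten-row frame of made-up monthly values; a negative n passed to head returns every row except the last n.

```python
import pandas as pd

# Hypothetical frame: ten made-up monthly index values starting January 2015.
df = pd.DataFrame(
    {
        "Date": pd.date_range("2015-01-01", periods=10, freq="MS"),
        "CompIndex": range(100, 110),
    }
)

first_three = df.head(3)         # rows 0-2
last_two = df.tail(2)            # rows 8-9
all_but_last_two = df.head(-2)   # rows 0-7

print(first_three)
print(last_two)
```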

How is the data stored? – pandas.DataFrame.dtypes

After checking some of our data, we can see that some columns are numeric and others contain text. Once we know how the data are stored, we will know how we can work with them.

HousingData.dtypes

Location              object
CompIndex            float64
CompBenchmark        float64
CompYoYChange        float64
SFDetachIndex        float64
SFDetachBenchmark    float64
SFDetachYoYChange    float64
SFAttachIndex        float64
SFAttachBenchmark    float64
SFAttachYoYChange    float64
THouseIndex          float64
THouseBenchmark      float64
THouseYoYChange      float64
ApartIndex           float64
ApartBenchmark       float64
ApartYoYChange       float64
Date                  object
dtype: object

We can see, for instance, that the “THouseIndex” column is stored as a floating-point number. The “Date” and “Location” columns, on the other hand, are stored as object, which in pandas usually means text. In other words, the dates were read in as strings rather than as date values.
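Since “Date” is stored as text, a common next step is to convert it into proper date values. The sketch below assumes a hypothetical two-row frame with made-up values and uses pd.to_datetime; errors="coerce" turns any unparseable string into NaT instead of raising an error. Converting “Location” to a categorical is optional.

```python
import pandas as pd

# Hypothetical toy frame (made-up values) where dates arrive as text.
df = pd.DataFrame(
    {
        "Location": ["Area A", "Area B"],
        "CompIndex": [100.0, 101.5],
        "Date": ["2015-01-01", "2015-02-01"],
    }
)
print(df.dtypes)  # text columns typically show as object; CompIndex is float64

# Parse the text dates into timestamps; unparseable strings would become NaT.
df["Date"] = pd.to_datetime(df["Date"], errors="coerce")

# Optional: store repeated location names as a categorical.
df["Location"] = df["Location"].astype("category")

print(df.dtypes)
```

Alternatively, passing parse_dates=["Date"] to pd.read_csv parses the column while the file is being read.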

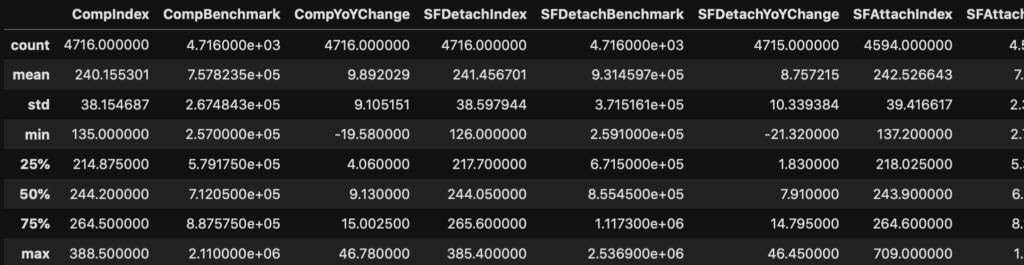

Descriptive statistics of our dataset – pandas.DataFrame.describe

Our data consists of many numerical values, but we do not yet know much about them. A statistical overview would help, and for that we can use the “describe” function.

HousingData.describe()

The “describe” function reports basic statistics for each numeric column: the count of non-missing values, the mean, the standard deviation, the minimum and maximum, and the 25th, 50th and 75th percentiles. This is very handy. For instance, not all count values are the same: the count of “SFAttachIndex” is lower than that of “SFDetachIndex”, which is a first hint that some values are missing. By default, “describe” only covers numeric columns; for text columns it reports a different set of statistics (count, unique, top and freq).
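To see how the count row exposes gaps, and how describe treats text, the sketch below assumes a hypothetical four-row frame with made-up values. Calling describe on a text column such as “Location” returns count, unique, top and freq instead of numeric statistics.

```python
import pandas as pd

# Hypothetical toy frame (made-up values); None marks a missing value.
df = pd.DataFrame(
    {
        "Location": ["Area A", "Area B", "Area A", "Area C"],
        "SFDetachIndex": [100.0, 102.0, 104.0, 106.0],
        "SFAttachIndex": [100.0, None, 101.0, None],
    }
)

# Default describe(): numeric columns only
# (count, mean, std, min, 25%, 50%, 75%, max).
numeric_summary = df.describe()

# count excludes missing values, so differing counts hint at gaps.
print(numeric_summary.loc["count"])

# On a text column, describe() reports count, unique, top and freq.
location_summary = df["Location"].describe()
print(location_summary)
```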

Identify missing data – pandas.DataFrame.isna

Missing data can easily affect how we process our dataset. For instance, the average of a column can change depending on whether missing values are skipped or replaced with some default. Hence, it is important to identify whether our dataset contains any missing (null) values.

HousingData.isna().sum()

Location                0
CompIndex              10
CompBenchmark          10
CompYoYChange          10
SFDetachIndex          10
SFDetachBenchmark      10
SFDetachYoYChange      11
SFAttachIndex         132
SFAttachBenchmark     132
SFAttachYoYChange     130
THouseIndex          1193
THouseBenchmark      1193
THouseYoYChange      1192
ApartIndex           1016
ApartBenchmark       1016
ApartYoYChange       1016
Date                    0
dtype: int64

As shown above, although we have more than 4,000 rows of data, most columns have some values missing. “THouseIndex” and “THouseBenchmark” have the most missing values (1,193 each). By comparison, “Location” and “Date” have no missing values at all.
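Raw counts are easier to compare as percentages, and the effect of missing values on an average is easy to demonstrate. The sketch below assumes a hypothetical four-row frame with made-up values. pandas’ mean skips NaN by default, so choosing between skipping gaps and filling them (here with 0, purely for illustration) changes the result.

```python
import pandas as pd

# Hypothetical toy frame (made-up values) with gaps, like the MLS columns.
df = pd.DataFrame(
    {
        "Location": ["Area A", "Area B", "Area C", "Area D"],
        "THouseIndex": [100.0, None, 110.0, None],
        "ApartIndex": [90.0, 95.0, None, 100.0],
    }
)

# Missing values per column, largest first.
missing = df.isna().sum().sort_values(ascending=False)

# Share of missing rows as a percentage (mean of booleans = fraction True).
missing_pct = df.isna().mean().mul(100).round(1)

# mean() skips NaN by default; filling gaps with 0 changes the answer.
mean_skip = df["THouseIndex"].mean()            # (100 + 110) / 2 = 105.0
mean_zero = df["THouseIndex"].fillna(0).mean()  # (100 + 0 + 110 + 0) / 4 = 52.5
```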

Summary – Getting familiar with data

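In conclusion, we have gone over some of the most basic functions for understanding our data, and why getting familiar with data is so important. They are:

pandas.DataFrame.shape – how many rows and columns we have

pandas.DataFrame.columns – what the column headers are

pandas.DataFrame.head / pandas.DataFrame.tail – what the first and last rows look like

pandas.DataFrame.dtypes – how each column is stored

pandas.DataFrame.describe – descriptive statistics for each column

pandas.DataFrame.isna – which values are missing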

With these basic insights, we can find ways to clean or even correct our data before we start any analysis. Check out our articles for next steps: